A new wave of mobile technology is on its way, and will bring drastic change

THE future is already arriving; it is just a question of knowing where to look. On Changshou Road in Shanghai, eagle eyes may spot an odd rectangular object on top of an office block: it is a collection of 128 miniature antennae. Pedestrians in Manhattan can catch a glimpse of an apparatus that looks like a video camera on a stand, but jerks around and has a strange, hornlike protrusion where the lens should be. It blasts a narrow beam of radio waves at buildings so they can bounce their way to the receiver. The campus of the University of Surrey in Guildford, England, is dotted with 44 antennae, which form virtual wireless cells that follow a device around.

These antennae are vanguards of a new generation of wireless technologies. Although the previous batch, collectively called “fourth generation”, or 4G, is still being rolled out in many countries, the telecoms industry has already started working on the next, 5G. On February 12th AT&T, America’s second-largest mobile operator, said it would begin testing whether prototype 5G circuitry works indoors, following similar news in September from Verizon, the number one. South Korea wants to have a 5G network up and running when it hosts the Winter Olympics in 2018; Japan wants the same for the summer games in 2020. When the industry holds its annual jamboree, Mobile World Congress, in Barcelona this month, 5G will top the agenda.

Mobile telecoms have come a long way since Martin Cooper of Motorola (pictured), inventor of the DynaTAC, the first commercially available handset, demonstrated it in 1973. In the early 2000s, when 3G technology made web-browsing feasible on mobiles, operators splashed out more than $100 billion on radio-spectrum licences, only to find that the technology most had agreed to use was harder to implement than expected.

The advent of 5G is likely to bring another splurge of investment, just as orders for 4G equipment are peaking. The goal is to be able to offer users no less than the “perception of infinite capacity”, says Rahim Tafazolli, director of the 5G Innovation Centre at the University of Surrey. Rare will be the device that is not wirelessly connected, from self-driving cars and drones to the sensors, industrial machines and household appliances that together constitute the “internet of things” (IoT).

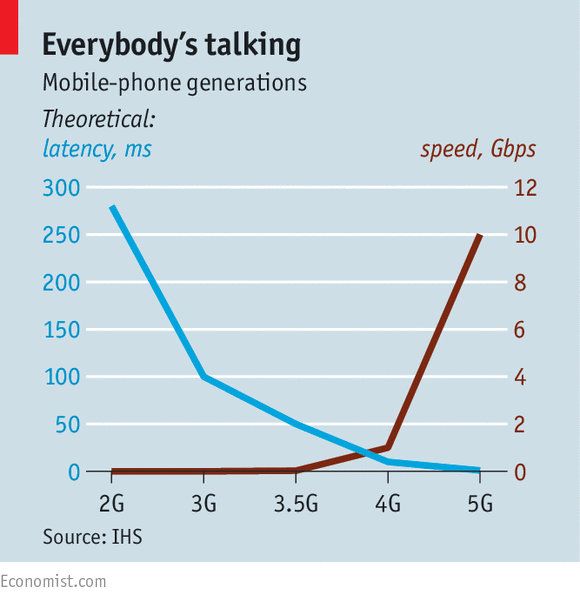

It is easy to dismiss all this as “a lot of hype”, in the words of Kester Mann of CCS Insight, a research firm. When it comes to 5G, much is still up in the air: not only which band of radio spectrum and which wireless technologies will be used, but what standards makers of network gear and handsets will have to comply with. Telecoms firms have reached consensus only on a set of rough “requirements”. The most important are connection speeds of up to 10 gigabits per second and response times (“latency”) of below 1 millisecond (see chart).
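To put the headline speed requirement in perspective, here is a rough back-of-the-envelope sketch in Python. It compares an idealised transfer at the 10-gigabit 5G target with the 1-gigabit peak quoted for the fastest 4G chip set. The 5-gigabyte file size and the function name are illustrative assumptions, and the calculation ignores protocol overhead, congestion and signal quality, so real-world times would be longer.

```python
def transfer_seconds(size_gigabytes: float, rate_gbps: float) -> float:
    """Idealised transfer time in seconds.

    Assumes the link runs at its peak rate for the whole transfer,
    with no protocol overhead or congestion (a simplification).
    """
    size_gigabits = size_gigabytes * 8  # 1 byte = 8 bits
    return size_gigabits / rate_gbps


FILM_GB = 5.0          # hypothetical file size, not a figure from the article
PEAK_5G_GBPS = 10.0    # the 5G "requirement" quoted above
PEAK_4G_GBPS = 1.0     # peak rate of the fastest 4G chip set mentioned in the article

if __name__ == "__main__":
    for label, rate in (("4G peak", PEAK_4G_GBPS), ("5G target", PEAK_5G_GBPS)):
        print(f"{label}: {transfer_seconds(FILM_GB, rate):.1f} seconds")
```

Under these assumptions the tenfold rise in peak speed simply cuts the transfer time to a tenth; the latency target is a separate requirement that this sketch does not model.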

Yet the momentum is real. South Korea and Japan are front-runners in wired broadband, and Olympic games are an opportunity to show the world that they intend also to stay ahead in wireless, even if that may mean having to upgrade their 5G networks to comply with a global standard once it is agreed. AT&T and Verizon both invested early in 4G, and would like to lead again with 5G. The market for network equipment has peaked, as recent results from Ericsson and Nokia show, so the makers also need a new generation of products and new groups of customers.

On the demand side, too, pressure is mounting for better wireless infrastructure. The rapid growth in data traffic will continue for the foreseeable future, says Sundeep Rangan of NYU Wireless, a department of New York University. According to one estimate, networks need to be ready for a 1,000-fold increase in data volumes in the first half of the 2020s. And the radio spectrum used by 4G, which mostly sits below 3 gigahertz, is running out, and thus getting more expensive. An auction in America last year raked in $45 billion.

But the path to a 5G wireless paradise will not be smooth. It is not only the usual telecoms suspects who will want a say in this mother of all networks. Media companies will want priority to be given to generous bandwidth, so they can stream films with ever higher resolution. Most IoT firms will not need much bandwidth, but will want their sensors to run on one set of batteries for years—so they will want the 5G standard to put a premium on low power consumption. Online-gaming firms will worry about latency: players will complain if it is too high.

The most important set of new actors, however, are information-technology firms. The likes of Apple, IBM and Samsung have a big interest not only in selling more smartphones and other mobile devices, but also in IoT, which is tipped to generate the next big wave of revenues for them and other companies. Google, which already operates high-speed fibre-optic networks in several American cities and may be tempted to build a wireless one, has shown an interest in 5G. In 2014 it bought Alpental Technologies, a startup which was developing a cheap, high-speed communications service using extremely high radio frequencies, known as “millimetre wave” (mmWave), the spectrum bands above 3 gigahertz where most of 5G is expected to live.

To satisfy all these actors will not be easy, predicts Ulf Ewaldsson, Ericsson’s chief technology officer. Questions over spectrum may be the easiest to solve, in part because the World Radiocommunication Conference, established by international treaty, will settle them. Its last gathering, in November, failed to agree on the frequencies for 5G, but it is expected to do so when it next meets in 2019. It is likely to carve out space in the mmWave bands. Tests such as the one in Manhattan mentioned above, which are conducted by researchers from NYU Wireless, have shown that such bands can be used for 5G: although they are blocked even by thin obstacles, they can be made to bounce around them.

For the first time there will not be competing sets of technical rules, as was the case with 4G, when LTE, now the standard, was initially threatened by WiMax, which was bankrolled by Intel, a chipmaker. Nobody seems willing to play Intel’s role this time around. That said, 5G will be facing a strong competitor, especially indoors: smartphone users are increasingly using Wi-Fi connections for calls and texts as well as data. That means they have ever less need for a mobile connection, no matter how blazingly fast it may be.

Evolution or revolution?

Technology divides the industry in another way, says Stéphane Téral of IHS, a market-research firm. One camp, he says, wants 5G “to take an evolutionary path, use everything they have and make it better.” It includes many existing makers of wireless-network gear and some operators, which want to protect their existing investments and take one step at a time. On February 11th, for instance, Qualcomm, a chip-design firm, introduced the world’s first 4G chip set that allows for data-transmission speeds of up to 1 gigabit per second. It does the trick by using a technique called “carrier aggregation”, which means it can combine up to ten wireless data streams of 100 megabits per second.
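The arithmetic behind that headline figure can be checked with a short sketch in Python. It only sums the peak rates of the combined streams; the function name is hypothetical, and real carrier aggregation also involves radio scheduling and varying signal conditions that this does not attempt to model.

```python
def aggregate_rate_mbps(stream_rates_mbps: list[float]) -> float:
    """Peak combined rate when several data streams are used in parallel.

    Simplification: assumes every stream delivers its full peak rate at once.
    """
    return sum(stream_rates_mbps)


# Figures from the article: up to ten streams of 100 megabits per second each.
streams = [100.0] * 10

if __name__ == "__main__":
    total_mbps = aggregate_rate_mbps(streams)
    print(f"{len(streams)} streams -> {total_mbps / 1000:.1f} gigabit per second")
```

With fewer streams available, the peak falls proportionally: five streams of the same size would top out at half a gigabit per second.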

The other camp, explains Mr Téral, favours a revolutionary approach: to jump straight to cutting-edge technology. This could mean, for instance, leaving behind the conventional cellular structure of mobile networks, in which a single antenna communicates with all the devices within its cell. Instead, one set of small antennae would send out concentrated radio beams to scan for devices, then a second set would take over as each device comes within reach. It could also mean analysing usage data to predict what kind of connectivity a wireless subscriber will need next and adapt the network accordingly—a technique that the 5G Innovation Centre at the University of Surrey wants to develop.

One of the most outspoken representatives of the revolutionary camp is China Mobile. For Chih-Lin I, its chief scientist, wireless networks, as currently designed, are no longer sustainable. Antennae are using ever more energy to push each extra megabit through the air. Her firm’s position, she says, is based on necessity: as the world’s biggest carrier, with 1.1m 4G base stations and 825m subscribers (more than all the European operators put together), problems with the current network architecture are exacerbated by the firm’s scale. Sceptics suspect there may be an “industrial agenda” at work that favours Chinese equipment-makers and lowers the patent royalties they have to pay. The more different 5G is from 4G, the higher the chances that China can make its own intellectual property part of the standard.

Whatever the motivation, Ms I’s vision of how 5G networks will ultimately be designed is widely shared. They will not only be “super fast”, she says, but “green and soft”, meaning much less energy-hungry and entirely controlled by software. As with computer systems before them, much of a network’s specialised hardware, such as the processor units that sit alongside each cell tower, will become “virtualised”—that is, it will be replaced with software, making it far easier to reconfigure. Wireless networks will become a bit like computing in the online “cloud”, and in some senses will merge with it, using the same off-the-shelf hardware.

Discussions have already begun about how 5G would change the industry’s structure. One question is whether wireless access will become even more of a commodity, says Chetan Sharma, a telecoms consultant. According to his estimates, operators’ share of total industry revenues has already fallen below 50% in America, with the rest going to mobile services such as Facebook’s smartphone apps, which make money through ads.

The switch to 5G could help the operators reverse that decline by allowing them to do such things as market their own video content. But it is easier to imagine their decline accelerating, turning them into low-margin “dumb pipes”. If so, a further consolidation of an already highly concentrated industry may be inevitable: some countries may be left with just one provider of wireless infrastructure, just as they often have only one provider of water.

If the recent history of IT after the rise of cloud computing is any guide—with the likes of Dell, HP and IBM struggling to keep up—network-equipment makers will also get squeezed. Ericsson and Nokia already make nearly half of their sales by managing networks on behalf of operators. But 5G may finally bring about what has been long talked of, says Bengt Nordstrom of Northstream, another consulting firm: the convergence of the makers of computers and telecoms equipment, as standardisation and low margins force them together. Last year Ericsson formed partnerships first with HP and then with Cisco. Full mergers could follow at some point.

Big, ugly mobile-phone masts will also become harder to spot. Antennae will be more numerous, for sure, but will shrink. Besides the rectangular array that China Mobile is testing in Shanghai, it is also experimenting with smaller, subtler “tiles” that can be combined and, say, embedded into the lettering on the side of a building. In this sense, but few others, the future of mobile telecoms will be invisible.